After five months of posting monthly, I cannot resist the temptation to slip in an extra one, mainly because I want to let you know about the Pint of Science Festival taking place next week. In Liverpool we have organised a series of three evenings at the Philharmonic pub on Hope Street featuring talks by engineers from the School of Engineering and the Institute for Digital Engineering and Autonomous Systems (IDEAS) at the University of Liverpool. I am planning to talk about digital twins – what they are, how we can use them, what they might become and whether we are already part of a digital world. If you enjoyed reading my posts on ‘Digital twins that thrive in the real world’, ‘Dressing up your digital twin’, and ‘Are we in a simulation?’ then come and discuss digital twins with me in person. My talk is part of a programme on Digital with Everything on May 15th. On May 13th and 14th we have programmes on Engineering in Nature and Science of Vision, Colliders and Crashes, respectively. I hope you can come and join us in the real world.

More on fairy lights and volume decomposition (with ice cream included)

Last June, I wrote about representing five-dimensional data using a three-dimensional stack of transparent cubes containing fairy lights whose brightness varied with time, and also using feature vectors in which the data are compressed into a relatively short string of numbers [see ‘Fairy lights and decomposing multi-dimensional datasets’ on June 14th, 2023]. After many iterations, we have finally had an article published describing our method of orthogonally decomposing multi-dimensional data arrays using Chebyshev polynomials. In this context, orthogonal means that components of the resultant feature vector are statistically independent of one another. The decomposition process consists of fitting a particular form of polynomials, or equations, to the data by varying the coefficients in the polynomials. The values of the coefficients become the components of the feature vector. This is what we do when we fit a straight line of the form y=mx+c to a set of values of x and y: the coefficients are m and c, and they can be used to compare data from different sources instead of comparing the datasets themselves. For example, x and y might be the daily sales of ice cream and the daily average temperature, with different datasets relating to different locations. Of course, it is much harder for data that are non-linear and vary with w, x, y and z, such as the intensity of light in the stack of transparent cubes with fairy lights inside. In our article, we did not use fairy lights or ice cream sales; instead, we compared the measurements and predictions in two case studies: the internal stresses in a simple composite specimen and the time-varying surface displacements of a vibrating panel.
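The idea of using fitted coefficients as a feature vector can be sketched in a few lines of Python. This is only a one-dimensional illustration, not the multi-dimensional method from our article. The ice cream data are made up, the function names `line_features` and `chebyshev_features` are hypothetical, and treating temperature as the independent variable is a choice made for this example.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb


def line_features(x, y):
    """Fit y = m*x + c by least squares and return the feature vector [m, c]."""
    m, c = np.polyfit(x, y, deg=1)
    return np.array([m, c])


def chebyshev_features(x, y, degree):
    """Fit a Chebyshev series to y(x) and return its coefficients.

    x is rescaled to [-1, 1], the interval on which Chebyshev
    polynomials are orthogonal.
    """
    x_scaled = 2.0 * (x - x.min()) / (x.max() - x.min()) - 1.0
    return cheb.chebfit(x_scaled, y, degree)


# Made-up data for two locations (illustrative values only).
rng = np.random.default_rng(0)
temperature = np.linspace(10.0, 30.0, 50)
sales_a = 12.0 * temperature + 40.0 + rng.normal(0.0, 5.0, temperature.size)
sales_b = 12.5 * temperature + 35.0 + rng.normal(0.0, 5.0, temperature.size)

# Compare the two datasets through their feature vectors [m, c]
# rather than through the raw data.
features_a = line_features(temperature, sales_a)
features_b = line_features(temperature, sales_b)
difference = features_a - features_b

# A non-linear dataset needs more coefficients than m and c.
wavy = np.sin(temperature / 5.0)
wavy_features = chebyshev_features(temperature, wavy, degree=6)

print("location A [m, c]:", features_a)
print("location B [m, c]:", features_b)
print("difference:", difference)
print("Chebyshev feature vector:", wavy_features)
```

Each dataset of 50 points is reduced to two numbers for the straight line, or seven for the degree-six Chebyshev fit, and it is these short vectors that get compared.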

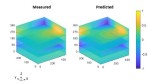

The image shows the normalised out-of-plane displacements of the surface of a panel, represented by colour, with the panel surface lying in the xy-plane and time plotted in the z-direction.

Source:

Amjad KH, Christian WJ, Dvurecenska KS, Mollenhauer D, Przybyla CP, Patterson EA. Quantitative Comparisons of Volumetric Datasets from Experiments and Computational Models. IEEE Access. 11: 123401-123417, 2023.

Work, rest and play in Smallville

I am comfortable with the lack of certainty about us not being in a simulation [see ‘Are we in a simulation?’ on September 28, 2022]. However, I know that some of you would prefer not to consider this possibility. Unfortunately, recently published research has likely increased the probability that we are in a simulation because the researchers set up a simulation of a community of human-like agents called Smallville [Park et al., 2023]. The generative agents fuse large language models used in artificial intelligence with computational, interactive agents who eat, sleep, work and play just like humans and coalesce into social groups. The simulation was created as a research tool for studying human interactions and emergent social behaviour, which completely concurs with the argument for us already being part of a simulation created to study social behaviour. Smallville only had 25 virtual inhabitants, but the speed of advances in artificial intelligence and computational tools perhaps implies that a simulation of billions of agents (people) is not as far in the future as we once thought, thus making it more credible that we are in a simulation. The emergent social behaviour observed in Smallville suggests that our society is essentially a self-organising complex system that cannot be micro-managed from the centre.

Sources:

Oliver Roeder, Keeping up with the ChatGPT neighbours, FT Weekend, August 26/27 2023.

Camilla Cavendish, Charities could lead new age of community spirit, FT Weekend, August 26/27 2023.

Park JS, O’Brien JC, Cai CJ, Morris MR, Liang P, Bernstein MS. Generative agents: Interactive simulacra of human behavior. arXiv preprint arXiv:2304.03442. 2023.

Image: Ceramic tile by Pablo Picasso in museum in Port de Sóller Railway Station, Mallorca.

Predicting release rates of hydrogen from stainless steel

The influence of hydrogen on the structural integrity of nuclear power plant, where water molecules in the coolant circuit can be split by electrolysis or radiolysis to produce hydrogen, has been a concern to engineers for decades. However, plans for a hydrogen economy and commercial fusion reactors, in which plasma-facing structural components will likely be exposed to hydrogen, have accelerated interest in understanding the complex interactions of hydrogen with metals, especially in the presence of irradiation. A key step in advancing our understanding of these interactions is the measurement and prediction of the uptake and release of hydrogen by key structural materials. We have recently published a study in Scientific Reports in which we developed a method for predicting the amount of hydrogen in a steel under test conditions. We used a sample of stainless steel as an electrode (cathode) in an electrolysis cell that split water molecules, producing hydrogen atoms that were attracted to the steel. After loading the steel with hydrogen in the cell, we measured the rate of release of the hydrogen from the steel over two minutes by monitoring the drop in current in the cell, using a technique called potentiostatic discharge. We used our measurements to calibrate a model of hydrogen release rate based on Fick’s second law of diffusion, which relates the rate of change of hydrogen concentration with time to the way the concentration gradient varies in the direction of motion. Finally, we used our calibrated model to predict the release rate of hydrogen over 24 hours and checked our predictions using a second measurement based on the hydrogen released when the steel was melted. So, now we have a method of predicting the amount of hydrogen remaining in a steel sample many hours after exposure during electrolysis, without destroying the test sample. This will allow us to perform better-defined tests on the influence of hydrogen on the performance of stainless steel in the extreme environments of fission and fusion reactors.
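To give a feel for how a diffusion model predicts hydrogen release, here is a minimal Python sketch. It solves Fick’s second law in one dimension, dC/dt = D d²C/dx², for a flat sample that starts uniformly loaded with hydrogen and has its surfaces held at zero concentration. It is not the calibrated model from our paper: the function name `simulate_release`, the geometry, the boundary conditions and the numerical values are all assumptions chosen for illustration.

```python
import numpy as np


def simulate_release(diffusivity, thickness, duration, n_nodes=51):
    """Explicit finite-difference solution of dC/dt = D * d2C/dx2 in a slab.

    The slab starts uniformly loaded (concentration 1) with both surfaces
    held at zero. Returns the times and the fraction of the initially
    loaded hydrogen that remains in the slab at each time.
    """
    dx = thickness / (n_nodes - 1)
    # Time step kept below the stability limit of 0.5 * dx**2 / D.
    dt = 0.4 * dx**2 / diffusivity
    n_steps = int(np.ceil(duration / dt))

    conc = np.ones(n_nodes)
    conc[0] = conc[-1] = 0.0  # hydrogen escapes freely at both surfaces
    initial_total = conc.sum() * dx

    times = dt * np.arange(n_steps + 1)
    remaining = np.empty(n_steps + 1)
    remaining[0] = 1.0
    for step in range(1, n_steps + 1):
        # Update interior nodes; the surface nodes stay at zero.
        conc[1:-1] += diffusivity * dt / dx**2 * (
            conc[2:] - 2.0 * conc[1:-1] + conc[:-2]
        )
        remaining[step] = conc.sum() * dx / initial_total
    return times, remaining


# Placeholder values for illustration only, not taken from the paper.
times, remaining = simulate_release(
    diffusivity=1.0e-12,   # m^2/s, assumed
    thickness=1.0e-3,      # m, assumed
    duration=24 * 3600.0,  # s, i.e. 24 hours
)
# Release rate as the fraction of the loaded hydrogen lost per second.
release_rate = -np.gradient(remaining, times)

print(f"fraction remaining after 24 hours: {remaining[-1]:.3f}")
print(f"release rate at 24 hours: {release_rate[-1]:.3e} per second")
```

In a sketch like this, calibration would mean adjusting `diffusivity` until the simulated release rate matches the rate measured over the first two minutes, and then running the model forward to 24 hours.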

Source:

Weihrauch M, Patel M, Patterson EA. Measurements and predictions of diffusible hydrogen escape and absorption in cathodically charged 316LN austenitic stainless steel. Scientific Reports. 13(1):10545, 2023.

Image:

Figure 2a from Weihrauch et al., 2023, showing the electrolysis cell setup for potentiostatic discharge experiments.