After five months of posting monthly, I cannot resist the temptation to slip in an extra one, mainly because I want to let you know about the Pint of Science Festival taking place next week. In Liverpool we have organised a series of three evenings at the Philharmonic pub on Hope Street featuring talks by engineers from the School of Engineering and the Institute for Digital Engineering and Autonomous Systems (IDEAS) at the University of Liverpool. I am planning to talk about digital twins – what they are, how we can use them, what they might become and whether we are already part of a digital world. If you enjoyed reading my posts on ‘Digital twins that thrive in the real world’, ‘Dressing up your digital twin’, and ‘Are we in a simulation?’ then come and discuss digital twins with me in person. My talk is part of a programme on Digital with Everything on May 15th. On May 13th and 14th we have programmes on Engineering in Nature and Science of Vision, Colliders and Crashes, respectively. I hope you can come and join us in the real world.

Highest mountain, deepest lake, smallest church and biggest liar

Last month we took a short vacation in the Lake District and stayed in Wasdale, whose tag-line is ‘highest mountain, deepest lake’. The mountain is Scafell Pike, the highest mountain in England at 978 m, which we never saw because the clouds never lifted high enough to reveal it. The lake is Wast Water, the deepest lake in England at 74 m, which rose slowly during our week due to the almost continuous rain falling on the surrounding hills. But that’s typical Lake District weather because the area protrudes to the west of England so it is the first landfall for rainstorms moving east after they have replenished with water over the Irish Sea. We spent our time reading in our cottage and venturing out to walk in the lowlands, where the lake was a calm presence, occasionally reflecting the surrounding mountains but more often dark, reflecting the low clouds. We were not tempted to test its temperature but I would expect it to have been around 4 °C because this is the temperature of the water in the depths of deep lakes in temperate regions all year round. In winter the surface layers of water will usually be colder than 4 °C and in summer warmer than 4 °C, reflecting the air temperature, so in spring when we visited the surface would probably have been around 4 °C. Fresh water is at its densest at about 4 °C, so water at this temperature sinks to the bottom while both warmer and colder water is less dense and floats above it; this is also why ice forms at the surface rather than at depth. Thus, the water at the bottom of deep lakes remains at about 4 °C all year, with a gradient of increasing temperatures towards the surface in summer and of decreasing temperatures in winter.

Wasdale also claims the smallest church, St Olaf’s, and the biggest liar, Will Ritson (1808-1890), who was a landlord of the Wastwater Hotel. He won the annual world’s biggest liar competition by saying, when it was his turn, that he was withdrawing from the competition because, having heard the other competitors, he could not tell a bigger lie.

Image: Wast Water with clouds sitting on Great Gable at the east end of the lake.

More on fairy lights and volume decomposition (with ice cream included)

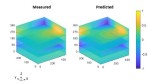

Last June, I wrote about representing five-dimensional data using a three-dimensional stack of transparent cubes containing fairy lights whose brightness varied with time, and also using feature vectors in which the data are compressed into a relatively short string of numbers [see ‘Fairy lights and decomposing multi-dimensional datasets’ on June 14th, 2023]. After many iterations, we have finally had an article published describing our method of orthogonally decomposing multi-dimensional data arrays using Chebyshev polynomials. In this context, orthogonal means that components of the resultant feature vector are statistically independent of one another. The decomposition process consists of fitting a particular form of polynomials, or equations, to the data by varying the coefficients in the polynomials. The values of the coefficients become the components of the feature vector. This is what we do when we fit a straight line of the form y=mx+c to a set of values of x and y: the coefficients are m and c, which can be used to compare data from different sources instead of the datasets themselves. For example, x and y might be the daily sales of ice cream and the daily average temperature, with different datasets relating to different locations. Of course, it is much harder for data that are non-linear and varying with w, x, y and z, such as the intensity of light in the stack of transparent cubes with fairy lights inside. In our article, we did not use fairy lights or ice cream sales; instead we compared the measurements and predictions in two case studies: the internal stresses in a simple composite specimen and the time-varying surface displacements of a vibrating panel.
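
To make the idea of a feature vector concrete, here is a minimal sketch in Python. It is not the method from our article, only a one-dimensional illustration of the same principle. The sales figures are invented, I have assumed temperature as x and sales as y, and the function names `fit_line`, `chebyshev_features` and `distance` are hypothetical. The Chebyshev coefficients are found by sampling at Chebyshev nodes, where the polynomials are orthogonal under a simple sum so that each coefficient can be calculated independently of the others.

```python
import math


def fit_line(xs, ys):
    """Least-squares fit of y = m*x + c; returns the feature vector (m, c)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    c = mean_y - m * mean_x
    return (m, c)


def chebyshev_features(f, n_terms, n_samples=64):
    """Coefficients c_j such that f(x) ~ sum_j c_j * T_j(x) on [-1, 1].

    f is sampled at Chebyshev nodes x_k = cos(theta_k), where
    T_j(x_k) = cos(j * theta_k) and the T_j are orthogonal under a plain sum.
    """
    thetas = [math.pi * (k + 0.5) / n_samples for k in range(n_samples)]
    values = [f(math.cos(theta)) for theta in thetas]
    coeffs = []
    for j in range(n_terms):
        total = sum(v * math.cos(j * theta) for v, theta in zip(values, thetas))
        scale = 1.0 if j == 0 else 2.0  # the constant term is not doubled
        coeffs.append(scale * total / n_samples)
    return coeffs


def distance(u, v):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


# Invented data: daily average temperature (x) and ice cream sales (y)
# at two hypothetical locations.
temperature = [10, 15, 20, 25, 30]
sales_a = [70, 95, 120, 145, 170]
sales_b = [80, 95, 110, 125, 140]

features_a = fit_line(temperature, sales_a)
features_b = fit_line(temperature, sales_b)
print("location A (m, c):", features_a)
print("location B (m, c):", features_b)
print("distance between feature vectors:", distance(features_a, features_b))

# A non-linear curve compressed into five Chebyshev coefficients.
print("Chebyshev features:", chebyshev_features(lambda x: math.exp(x), 5))
```

Comparing the two short feature vectors takes the place of comparing the datasets point by point, which is the step that becomes valuable when the data vary in four or five dimensions.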

The image shows the normalised out-of-plane displacements, represented by colour, for the surface of a panel lying in the xy-plane, with time plotted in the z-direction.

Source:

Amjad KH, Christian WJ, Dvurecenska KS, Mollenhauer D, Przybyla CP, Patterson EA. Quantitative Comparisons of Volumetric Datasets from Experiments and Computational Models. IEEE Access. 11: 123401-123417, 2023.

Evolutionary model of knowledge management

Towards the end of last year, I wrote about the challenges in deploying digital technologies in holistic approaches to knowledge management in order to gain organizational value and competitive advantage [see ‘Opportunities lost in knowledge management using digital technology’ on October 25th, 2023]. Almost on the last working day of 2023, we had an article published in PLOS ONE (my first in the journal) in which we explored ‘The impact of digital technologies on knowledge networks in two engineering organisations’. We used social network analysis and semi-structured interviews to investigate the culture around knowledge management, and the deployment of digital technologies in support of it, in an engineering consultancy and an electricity generator. The two organizations had different cultures and levels of deployment of digital technologies. We proposed a new evolutionary model of the culture of knowledge management based on Hudson’s evolutionary model of safety culture, which is widely used in industry. Our new model is illustrated in the figure from our article, starting from ‘Ignored: we have no knowledge management and no plans for knowledge management’ through to ‘Embedded: knowledge management is integrated naturally into the daily workflow’. Based on our findings for the two engineering organizations, we also proposed that social networks could be used as an indicator of the stage of evolution of knowledge management, with low network density and dispersed networks representing higher stages of evolution.
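
For readers unfamiliar with network density, it is the number of links present in a network divided by the number of links that could exist. The short Python sketch below illustrates the calculation for an undirected network. The people and links are invented, the function name `network_density` is hypothetical, and it is not the analysis performed in our article.

```python
def network_density(n_people, links):
    """Density of an undirected network: links present / links possible."""
    # Treat (a, b) and (b, a) as the same link and ignore self-links.
    unique = {frozenset(pair) for pair in links if pair[0] != pair[1]}
    possible = n_people * (n_people - 1) / 2
    return len(unique) / possible


# Invented example: five people, each link meaning "exchanges knowledge with".
links = [("Ann", "Bob"), ("Bob", "Cat"), ("Cat", "Dev"), ("Dev", "Eve")]
print("density:", network_density(5, links))
```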

Hudson PTW. Safety management and safety culture: the long, hard and winding road. Occupational Health and Safety Management Systems: 3-32, 2001.

Patterson EA, Taylor RJ, Yao Y. The impact of digital technologies on knowledge networks in two engineering organisations. PLoS ONE 18(12): e0295250, 2023.